Condor has traditionally only been available as binaries, and most of the time this is fine. We successfully run the Mac OS X PowerPC & Intel binaries, and for openSUSE 10.x we use the Red Hat 9/dynamic binaries. However, for 64-bit openSUSE the binary (RH5) doesn't really work: it struggles to start up. Since the release of Condor 7.x the source has been included, so that seemed a sensible avenue to explore. It does compile, but it is a bit more involved than just configure; make; make install!

These instructions should hold true for 7.0.0, 7.0.1 & 7.0.2 as well.

Grab the source for the latest stable or development release (I personally go with the development release).

First off, glance through the README and check you have all the prerequisites. I also found I needed to add termcap, terminfo, ncurses-devel and flex (grab them from YaST).
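Before starting a long build it can help to confirm that the command-line tools are actually on the PATH. The following is a minimal sketch, not part of the Condor build system: the function name missing_tools is hypothetical, and the list of tools is an assumption (flex comes from the list above; gcc and make are assumed build basics). It only checks executables, so libraries such as ncurses-devel still need to be verified through the package manager.

```shell
#!/bin/sh
# Print each named command that cannot be found on PATH, one per line.
# (missing_tools is a hypothetical helper name, not something Condor ships.)
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}

# Illustrative list only: adjust it to match the README for your release.
missing=$(missing_tools gcc make flex)
if [ -n "$missing" ]; then
    echo "Missing build tools:" $missing
else
    echo "All listed build tools were found."
fi
```

Anything it reports as missing can then be installed from YaST before running configure.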

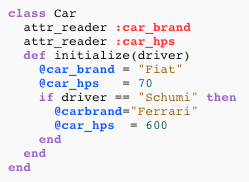

Now let's start configuring:

    cd src
    ./build_init
    ./configure --disable-gcc-version-check --disable-full-port --without-classads

The configure flags mean:

- --disable-gcc-version-check: our gcc version is newer than the built-in checks allow.

- --disable-full-port: no standard universe/checkpointing. It is standard for a new OS port not to have these.

- --without-classads: ClassAds not yet supported (no condor_q -better-analyze).

You will get an error:

    configure: error: Condor does NOT know what glibc external to use with glibc-2.6.1

To get around this, edit configure.ac with your favourite editor. Around line 2500, add this option to the case statement:

    "2.6.1" ) # openSUSE 10.3
    including_glibc_ext=NO;;

Now run build_init and configure again as above.
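To show how the new arm fits into a shell case statement, here is a small stand-alone sketch. It is not the real content of configure.ac: the wrapping function glibc_ext_decision and the fallback arm are assumptions made for illustration, and only the "2.6.1" arm and the including_glibc_ext variable come from the edit described above.

```shell
#!/bin/sh
# Hypothetical stand-in for the glibc check in configure.ac.
# Takes a glibc version string and prints the including_glibc_ext setting.
glibc_ext_decision() {
    case "$1" in
        "2.6.1" ) # openSUSE 10.3
            including_glibc_ext=NO;;
        * )
            # Unknown versions fall through to the error seen earlier.
            echo "Condor does NOT know what glibc external to use with glibc-$1" >&2
            return 1;;
    esac
    echo "$including_glibc_ext"
}
```

Calling glibc_ext_decision 2.6.1 prints NO, while any version without its own arm fails with the same kind of message configure produced. That is why adding an arm for your glibc version is enough to get past the check.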

If that runs, you can then execute make. Sit back, as this may take a while!

If there is a problem, typically in the externals part, view the log file that the error output points to. You may need to install something (such as the packages I mentioned earlier).
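When a log is long, it can be quicker to pull out just the lines that mention an error. This is a rough sketch under stated assumptions: log_errors is a hypothetical helper, and the log path is whatever file the build output names (it is not fixed here). Matching on the word "error" is a simple heuristic and may show harmless lines too.

```shell
#!/bin/sh
# Print lines in a build log that mention "error" (case-insensitive),
# prefixed with their line numbers. Returns non-zero when nothing matches.
# (log_errors is a hypothetical helper name.)
log_errors() {
    grep -n -i 'error' "$1"
}

# To keep your own copy of the make output, one common pattern is:
#   make 2>&1 | tee build.log
# and then: log_errors build.log
```

The line numbers make it easy to jump to the first failure in an editor and read the context around it.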

Compilation is finished when something like this pops up (with no obvious errors):

    make[1]: Nothing to be done for 'all'.
    make[1]: Leaving directory '/home/build/condor-7.1.0/src/condor_examples'

Everything is compiled, so now prepare the release:

- make release (output to release_dir; dynamically linked with debugging, ready for testing)

- make public (output to ../public; adds stripped dynamic/static linked binaries with no debugging)

Find your final installation bundle in ../public as condor-7.1.0---dynamic.tar.gz. Unpack and use it as you normally would.

condor_version will reveal your custom compile:

    $CondorVersion: 7.1.0 May 21 2008 $
    $CondorPlatform: X86_64-LINUX_SuSE_UNKNOWN $

Need help/advice? There is an excellent presentation which covers this, as well as the users mailing list, which is both active and helpful.
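Going back to the condor_version check: if you want a script to tell a custom build apart from the stock binaries, the platform string can be pulled out of that output. A minimal sketch, assuming the output keeps the $CondorPlatform: ... $ shape shown above; condor_platform is a hypothetical helper name.

```shell
#!/bin/sh
# Read condor_version output on stdin and print only the platform string.
# Assumes a line of the form: $CondorPlatform: <platform> $
# (condor_platform is a hypothetical helper name.)
condor_platform() {
    sed -n 's/^\$CondorPlatform: \(.*\) \$$/\1/p'
}

# Typical use on a machine with Condor installed:
#   condor_version | condor_platform
```

For the build described here, that pipeline would be expected to print the X86_64-LINUX_SuSE_UNKNOWN platform string.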

Hopefully in the future condor will have better support for openSUSE so that a full port (standard universe/checkpointing) and ClassAds will be available.

Good luck!

Mac OS X Leopard comes with Apache2 and PHP pre-installed, but no MySQL. This is the missing link in the very useful MAMP setup. The kind people at mysql.com offer it ready packaged for Mac users. When picking your version, MacIntel users should pick the 32-bit version, unless you want problems installing Perl's DBD::mysql. What is DBD::mysql? It is the very useful module that allows Perl scripts to access your MySQL databases. If you install the 64-bit version of MySQL you will hit errors when you install DBD::mysql, as a 32-bit installation of Perl is not interested in compiling against 64-bit MySQL libraries.
